What If AI Agents Could Fix MLOps Forever? A Thought Experiment

I was debugging a model deployment at 2 AM last week when a wild thought hit me: What if we could automate literally everything in the ML pipeline using intelligent agents that call our existing tools?

The frustration was real. Our customer churn model had silently degraded over three weeks because of data drift we didn’t catch. The handoff between our data science team and DevOps was broken—again. I was manually checking Kubernetes logs, cross-referencing MLflow experiments, and trying to figure out why our Prometheus alerts didn’t fire.

That’s when it hit me: What if AI agents could orchestrate all these tools through something like MCP (Model Context Protocol) and handle this entire mess autonomously?

Not just the usual MLOps automation we talk about—CI/CD for models, automated testing, monitoring dashboards. I mean everything. What if agents could seamlessly interact with MLflow, call Kubernetes APIs, query your Weights & Biases experiments, and orchestrate your entire ML stack through intelligent tool calling?

Let me walk you through this thought experiment and see where it takes us.

My MLOps Frustrations (Maybe Yours Too?)

Before diving into the “what if” scenario, let me share what triggered this thought experiment. Maybe you’ll recognize these pain points:

The Tool Coordination Nightmare: We have amazing MLOps tools—MLflow for experiments, Kubernetes for deployment, Evidently AI for drift detection, Weights & Biases for tracking. But getting them to work together? That’s where humans become the glue, manually checking one tool and then updating another.

The 3 AM Alert Problem: Our Prometheus alerts fire after something’s already broken. By the time I get the notification, users have been getting bad predictions for hours. What if an agent could predict these issues and fix them before they impact users?

The Silent Drift Disaster: Data drift is like carbon monoxide poisoning for ML models—silent and deadly. Our monitoring caught it three weeks too late. What if an agent was constantly checking and could trigger retraining automatically?

The Manual Orchestra: Every MLOps workflow requires a human conductor. Check data quality in Great Expectations, trigger training in MLflow, monitor in Grafana, deploy via Kubernetes. What if AI agents could be that conductor?

This technical debt compounds faster than we can manage it, and I started wondering: What if intelligent agents could orchestrate our entire MLOps tool ecosystem?

Thought Experiment: What Would Agent-Driven MLOps Look Like?

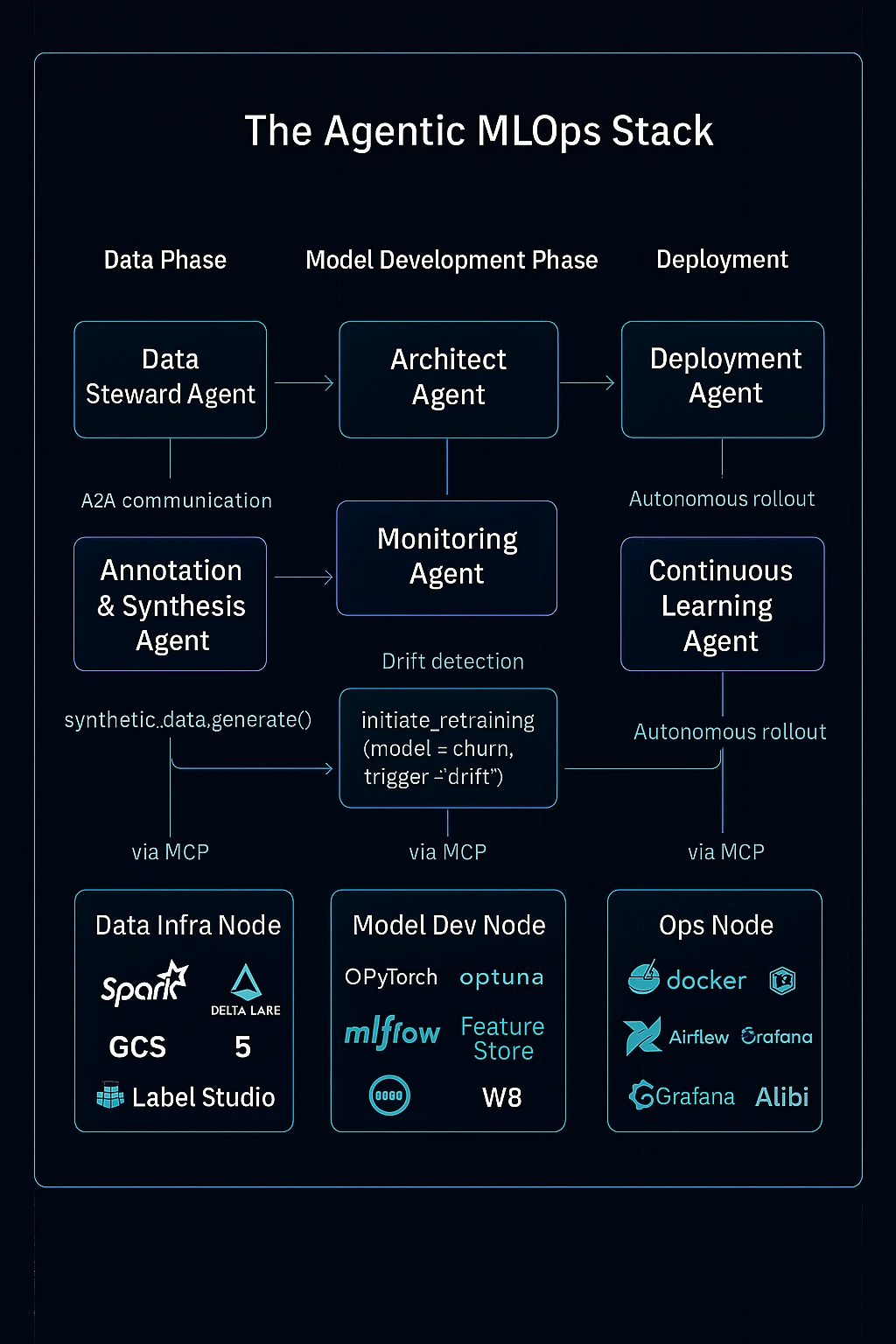

Let’s imagine we could build specialized AI agents that interact with your existing MLOps tools through standardized protocols like MCP. What would that dream team look like?

Here’s my vision for the future of automated MLOps with intelligent tool-calling agents:

| Future AI Agent | Tool Integrations | What This Could Enable |

|---|---|---|

| Data Guardian Agent | DVC, Great Expectations, Evidently AI | Autonomous data quality and drift monitoring |

| Synthesis Agent | Synthetic data tools, labeling platforms | On-demand training data generation |

| Architect Agent | MLflow, Weights & Biases, Optuna | Autonomous model architecture discovery |

| Optimization Agent | Hyperopt, Ray Tune, ClearML | Intelligent hyperparameter optimization |

| Deployment Agent | Kubernetes, Docker, MLflow Model Registry | Risk-free automated deployments |

| Sentinel Agent | Prometheus, Grafana, DataDog | Predictive monitoring and alerting |

| Evolution Agent | CI/CD tools, experiment tracking platforms | Orchestrated continuous learning |

What if these agents could seamlessly call tools through a unified interface? Imagine an agent that could query your Weights & Biases experiments, analyze results, then automatically trigger a new training run with optimized parameters—all while updating your project management tools and notifying your team.

Phase 1: What If Data Management Was Completely Tool-Integrated?

Imagine your Data Guardian Agent continuously monitoring your data pipelines through intelligent tool calling:

# What if data quality monitoring was this autonomous?

async def autonomous_data_monitoring():

# Check data quality via Great Expectations

quality_report = await call_tool("great_expectations", {

"action": "run_checkpoint",

"checkpoint": "daily_data_quality"

})

# Detect drift via Evidently AI

drift_analysis = await call_tool("evidently_ai", {

"action": "analyze_drift",

"reference_data": "production_baseline.csv"

})

if drift_analysis["drift_detected"]:

# Auto-trigger retraining pipeline

await call_tool("prefect", {

"action": "trigger_flow",

"flow_name": "emergency_retrain"

})What if data drift detection was predictive instead of reactive? This agent wouldn’t just catch drift after it happens—it could analyze trends across your entire data ecosystem and proactively prepare for changes.

What if synthetic data generation became as easy as natural language requests? Your Synthesis Agent could interact with generative tools to create exactly the training data you need, automatically integrating it into your training pipelines through DVC or your data versioning tool of choice.

Phase 2: What If Model Development Was Fully Tool-Orchestrated?

Here’s where the thought experiment gets really exciting. What if your Architect Agent could orchestrate experiments across your entire ML tool stack?

# What if architecture search was this intelligent?

async def autonomous_architecture_search(objective: str):

# Start experiment in Weights & Biases

experiment = await call_tool("wandb", {

"action": "create_experiment",

"project": "auto_architecture_search"

})

# Launch distributed search via Ray

search_job = await call_tool("ray", {

"action": "submit_job",

"script": "nas_search.py",

"resources": {"cpu": 8, "gpu": 2}

})

# Get best model from MLflow

best_model = await call_tool("mlflow", {

"action": "get_best_model",

"experiment_id": search_job["experiment_id"]

})

return best_modelGoogle’s AmoebaNet discovery proved that evolutionary algorithms can find better architectures than humans design. What if this was just the beginning? What if agents could coordinate between Optuna for hyperparameters, Ray for distributed compute, and MLflow for experiment tracking—all autonomously?

What if your optimization workflows could span multiple tools seamlessly? The Optimization Agent could intelligently coordinate between Bayesian optimization frameworks, distributed computing platforms, and experiment tracking systems.

Phase 3: What If Production Was Fully Autonomous Through Tool Integration?

This is where the vision gets wild. What if your production environment was orchestrated by agents that seamlessly integrate with your entire infrastructure stack?

# What if deployments were this intelligent?

async def autonomous_model_deployment(model_uri: str):

# Health check the cluster

cluster_health = await call_tool("kubernetes", {

"action": "get_cluster_health",

"namespace": "ml-production"

})

if cluster_health["status"] != "healthy":

await call_tool("pagerduty", {

"action": "create_alert",

"message": "Cluster unhealthy, deployment paused"

})

return False

# Start canary deployment

deployment = await call_tool("kubernetes", {

"action": "create_canary_deployment",

"model_uri": model_uri,

"traffic_split": 0.05

})

# Monitor performance via Prometheus

metrics = await call_tool("prometheus", {

"action": "query_metrics",

"query": "model_accuracy{deployment='canary'}"

})

if metrics["accuracy"] < 0.95:

# Auto-rollback if performance drops

await call_tool("kubernetes", {

"action": "rollback_deployment",

"deployment_id": deployment["id"]

})

return False

# Promote to full production

await call_tool("kubernetes", {

"action": "promote_canary",

"deployment_id": deployment["id"]

})

return TrueWhat if rollbacks were so smart they were preventive? The Deployment Agent could detect subtle performance anomalies through Prometheus or DataDog before they become user-facing issues and proactively switch to a backup model.

What if your Sentinel Agent could predict model failures by analyzing patterns across monitoring tools? It could coordinate between Grafana dashboards, Prometheus metrics, and application logs to forecast issues and automatically trigger the Evolution Agent for preventive retraining.

What if the Evolution Agent orchestrated continuous improvement across your entire tool ecosystem? It could constantly run A/B tests through your experimentation platform, automatically promote winners via MLflow Model Registry, and retire underperformers—all while keeping your team informed through Slack or your preferred communication tool.

The Human-Agent Superpower: What If We Became ML Strategists?

Here’s where I think the real magic happens: What if the combination of human intuition and agent automation gave us ML superpowers?

Instead of choosing between full automation or manual processes, what if we created a partnership where humans focus on strategic decisions while agents handle the execution? I’m not talking about replacing humans—I’m talking about amplifying human intelligence with tireless AI assistants.

What if you could think at the speed of intuition and execute at the speed of automation?

Picture this workflow:

- You wake up with an idea: “What if we retrain our recommendation model to be more conservative during economic uncertainty?”

- You tell your agent team the goal and constraints

- They coordinate across your entire tool stack to test this hypothesis

- You review results over coffee and make the strategic call

- Agents handle the implementation across production

What if humans became the “why” and agents became the “how”?

Instead of being the glue between disparate tools, we’d become ML strategists—setting the vision, defining the constraints, and making the nuanced decisions that require business context. What if our job became asking the right questions and making strategic calls while agents handled the orchestration?

- “Should we optimize for accuracy or fairness in this hiring model?”

- “What are the ethical boundaries for our recommendation algorithms?”

- “How do we balance personalization with privacy during this campaign?”

New hybrid roles would emerge: ML Strategy Directors who guide agent teams toward business outcomes, and AI-Human Interface Designers who optimize how humans and agents collaborate most effectively.

What if the most valuable skill became the ability to think strategically while your agent team executes flawlessly across your entire MLOps ecosystem?

The Technology Stack: What If This Could Actually Work?

While this is still largely a thought experiment, the building blocks are emerging. Open-source frameworks like LangChain and CrewAI are starting to enable multi-agent coordination. MCP (Model Context Protocol) could standardize how agents interact with tools. Enterprise platforms are building more API-driven interfaces.

What if we combined these trends with advances in AI reasoning and tool calling? The technology for autonomous MLOps orchestration might be closer than we think.

From Thought Experiment to Reality (Shameless Plug Alert!)

Here’s where this gets personal: I was so excited about this idea that same night at 2 AM, I couldn’t sleep. So instead of going back to bed, I built a proof of concept.

I created clearml-mcp - a Model Context Protocol server that lets AI agents interact with ClearML for experiment tracking and model management. It’s a tiny first step toward this vision, but it works! What if this was just the beginning?

The repo shows how an agent could theoretically:

- Query your ClearML experiments

- Create new training tasks

- Monitor model performance

- Manage your model registry

It’s basic, but it proves the concept: AI agents can already start orchestrating our ML tools through standardized protocols.

What if more people started building MCP servers for their favorite MLOps tools? Imagine agents that could coordinate between MLflow, Weights & Biases, Kubernetes, Prometheus, and your entire stack through standardized interfaces.

The Wild Conclusion: What If This Changes Everything?

Here’s the thought that keeps me up at night: What if fully automated MLOps through intelligent tool orchestration isn’t just about efficiency—what if it completely changes what’s possible with machine learning?

If agents could seamlessly coordinate your entire MLOps tool ecosystem, if deployment became risk-free, if models continuously evolved through automated tool workflows… what kinds of AI applications become feasible that we can’t even imagine today?

Maybe every small business could have world-class ML capabilities orchestrated by agents. Maybe we could solve problems that currently require massive MLOps teams with just a few intelligent tool-calling agents. Maybe the constraint on ML innovation shifts from tool coordination complexity to creative problem formulation.

What if ML operations became as seamless as calling an API?

Your Turn: What Do You Think?

This started as a frustrated thought at 2 AM, but it’s becoming a vision that feels increasingly plausible. What if you could start building pieces of this today?

Maybe you start with one agent that coordinates between your monitoring tools, or experiment with AI-assisted experiment tracking across MLflow and Weights & Biases. Maybe you explore what tool orchestration could mean for your specific MLOps stack.

What would your ideal autonomous MLOps system look like? What would become possible if AI agents could seamlessly orchestrate your entire ML tool ecosystem?

The future of AI-driven MLOps might be closer than we think—and it might be even more transformative than we imagine.

Related Posts

Agent Frameworks Ain't Agent Building

Build agents from the inside out — start with behavior in CLAUDE.md, add capabilities via MCP, then wrap in code with the Claude Agent SDK.

Repowire: A Pull-Based Context Breakout

What if coding agents could ask each other questions directly? Introducing repowire, a mesh network that lets Claude Code sessions communicate in real-time.

The Vibe Bottleneck

Coding agents are great at understanding repositories. But when work spans multiple repos, you become the message transmitter, the slowdown, and the source of errors.