Agent & ML Infrastructure

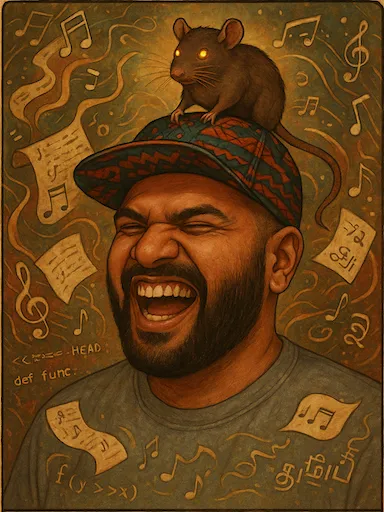

Prassanna Ravishankar

Architecting infrastructure for the Agentic Web.

Synthesizing Systems, Intelligence, and the Poetic.

The Signal

Latest Syntheses

Systems // Intelligence // Poetics

Conversations

LISTEN → The Signal & The Silence

Decoding the signals of tomorrow. From neural networks to philosophical voids.